Mirvie is a pregnancy health research company using new RNA research to predict problems in pregnancy earlier than ever before. Before working with Cloud303, they ran complex computations on servers at their offices, but with the volume of incoming test samples constantly growing, they needed a scalable option that would let their research grow with the company.

Before working with Cloud303, an AWS Premier Consulting Partner, Mirvie was running servers locally at their offices in San Francisco. As the company grew and the number of samples they received increased, this setup could no longer meet their needs. They wanted to switch to a scalable platform that would allow them to grow easily and naturally.

Demonstrated Expertise in HPC

Cloud303 possesses specialized expertise in HPC, which is crucial for applications that require complex computational processes. This includes genomics sequencing, molecular modeling, and advanced simulations.

Robust Infrastructure

The infrastructure provided by Cloud303 is tailored to meet the stringent performance, reliability, and scalability needs of HPC. Our team offers a robust ecosystem that can handle large-scale and intricate computations.

Exceptional Support and Security

Cloud303 offers round-the-clock exceptional support, along with proven security protocols, to ensure that the sensitive data and complex workloads are managed in compliance with industry standards.

Proven Track Record

Cloud303 has a strong history of successful partnerships within the life sciences industry. Our commitment to excellence, reliability, and client-focused solutions has made us a trusted partner.

Cloud303's engagements follow a streamlined five-phase lifecycle: Requirements, Design, Implementation, Testing, and Maintenance. Initially, a comprehensive assessment is conducted through a Well-Architected Review to identify client needs. This is followed by a scoping call to fine-tune the architectural design, upon which a Statement of Work (SoW) is agreed and signed.

The implementation phase kicks in next, closely adhering to the approved designs. Rigorous testing ensures that all components meet the client's specifications and industry standards. Finally, clients have the option to either manage the deployed solutions themselves or to enroll in Cloud303's Managed Services for ongoing maintenance, an option many choose due to their high satisfaction with the services provided.

Infrastructure Automation with Terraform

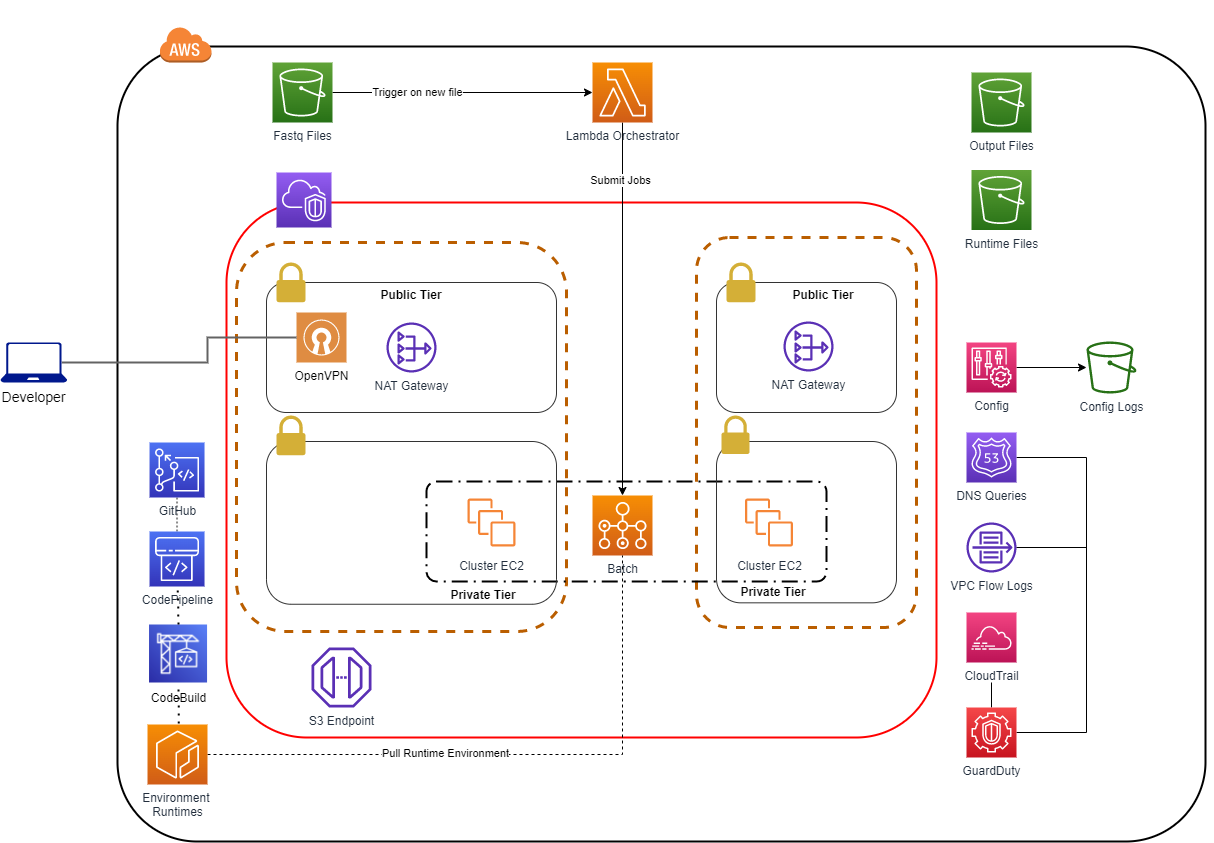

Terraform was used to standardize and automate infrastructure deployment on AWS. GitLab provided version control, and the CI/CD pipeline was orchestrated by CodePipeline to automate the development process, pushing built containers of the runtime environment to Elastic Container Registry (ECR) and the necessary Nextflow job files to S3.

Comprehensive Testing Across Environments

An end-to-end (e2e) testing strategy was used across all environments (dev, stage, prod) to check whether the workflow functioned as expected across the entire stack and architecture, including the integration of all microservices and components that are supposed to work together.

Version Control and Monitoring

One important aspect of this build was strict version control so jobs could be closely tracked. Commit IDs from the source repository were used to tag builds and Nextflow code, so every file was associated with a unique identifier that could be monitored. CodePipeline is also part of this effort: it updates parameters in SSM Parameter Store to help keep track of the current build.
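The commit-ID tagging described above can be illustrated with a minimal sketch. The repository URI, bucket name, parameter name, and the 12-character short ID are all hypothetical; the source does not state the real values or how the tags were formatted.

```python
import re

# Hypothetical names for illustration only; the source does not give the
# real repository, bucket, or parameter names.
ECR_REPO = "123456789012.dkr.ecr.us-east-1.amazonaws.com/pipeline-runtime"
WORKFLOW_BUCKET = "example-workflow-bucket"
SSM_PARAMETER = "/pipeline/current-build"

_COMMIT_RE = re.compile(r"^[0-9a-f]{7,40}$")


def build_artifacts(commit_id: str) -> dict:
    """Derive every artifact name for one build from a single commit ID."""
    commit_id = commit_id.strip().lower()
    if not _COMMIT_RE.match(commit_id):
        raise ValueError(f"not a Git commit ID: {commit_id!r}")
    short = commit_id[:12]  # assumed tag length, not from the source
    return {
        # Container image tag for the runtime environment in ECR.
        "image_uri": f"{ECR_REPO}:{short}",
        # S3 prefix holding the Nextflow job files for this build.
        "workflow_prefix": f"s3://{WORKFLOW_BUCKET}/workflows/{short}/",
        # Value a pipeline step could write to SSM Parameter Store.
        "ssm_parameter": {"Name": SSM_PARAMETER, "Value": short},
    }
```

Because the image tag, the S3 prefix, and the stored parameter all come from the same commit ID, any job output can be traced back to the exact code that produced it.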

Various tests, such as unit, functional, load, and integration tests, were automated and run in parallel to get faster and earlier feedback on continuously integrated code.

HIPAA-Compliant Bioinformatics Pipeline

Cloud303 used an S3 bucket for storage and AWS Batch to orchestrate Mirvie’s computing environment, which runs the Nextflow jobs in their bioinformatics pipeline. All of this was built under the HIPAA compliance umbrella so that their clients’ data was protected at the highest level.

Cloud303 started by taking Nextflow scripts that Mirvie was already using and moving them into the cloud to run through AWS Batch. Since this deployment aimed for cost optimization in addition to greater power and efficiency, the plan was to perform compute tasks on Spot Instances rather than on-demand instances, because of their excellent value.

Nextflow is designed for parallel scientific computing, but it is not normally able to cope with compute nodes appearing and disappearing, as they can when working with Spot fleets. By using S3 to preserve the application’s state, Cloud303 designed a head node that can resume processes and retry jobs that were dropped. This allows Nextflow to run in an environment where node availability is not guaranteed, vastly increasing both its flexibility and affordability as a parallel computing platform in the cloud.
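The resume-and-retry idea can be sketched in a few lines. This is not Cloud303's head node or Nextflow's own mechanism; it is a simplified illustration in which a plain dictionary stands in for the S3 bucket, and `NodeLost`, `run_with_resume`, and the state key are hypothetical names.

```python
import json
from typing import Callable, Dict, Iterable


class NodeLost(Exception):
    """Raised when a task's compute node disappears (e.g. a Spot reclaim)."""


def run_with_resume(
    tasks: Iterable[str],
    run_task: Callable[[str], str],
    store: Dict[str, str],
    max_attempts: int = 3,
) -> Dict[str, str]:
    """Run tasks, skipping finished ones and retrying interrupted ones.

    `store` stands in for an S3 bucket: a key/value map that outlives the
    head node. Results are persisted there after every completed task.
    """
    state_key = "state/completed.json"
    completed = json.loads(store.get(state_key, "{}"))
    for task in tasks:
        if task in completed:
            continue  # finished in an earlier run; resume past it
        for attempt in range(1, max_attempts + 1):
            try:
                completed[task] = run_task(task)
                break
            except NodeLost:
                if attempt == max_attempts:
                    raise  # give up; earlier results are already saved
        # Persist after every task so an interruption loses at most one.
        store[state_key] = json.dumps(completed)
    return completed
```

Calling `run_with_resume` a second time with the same store skips every task already recorded, which is the behavior that makes interruptible capacity usable for long pipelines.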

Ephemeral Data Handling and Encryption

Nodes share an EFS volume so they have a common ephemeral data directory to work with, though staged files are copied locally to increase overall speed. The whole workload (S3, EFS, and local volumes) is encrypted using customer-managed KMS keys, and secrets are managed in SSM Parameter Store.

When files for a job are submitted, they must be accompanied by a text file containing the details needed to complete the job successfully. S3 Events are used to monitor for those submissions and, when the files are uploaded, a Lambda function is triggered to configure the job and get all the data where it needs to be. By using S3 Events and Lambda in this way, there is no need for a persistently running server to monitor for new jobs, which further helps with cost savings.
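As a rough sketch of the event-driven submission flow, the handler below turns an S3 event notification into arguments for AWS Batch's `submit_job` call. The queue name, job definition name, `.job.txt` suffix, and `MANIFEST_URI` variable are assumptions made for illustration; the source does not describe the actual naming or job configuration.

```python
import re
import urllib.parse

# Hypothetical names; the source does not give the real queue, job
# definition, or naming convention for the job's text file.
JOB_QUEUE = "example-spot-queue"
JOB_DEFINITION = "example-nextflow-head"
MANIFEST_SUFFIX = ".job.txt"


def build_batch_requests(event: dict) -> list:
    """Turn an S3 event notification into AWS Batch submit_job arguments."""
    requests = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        if not key.endswith(MANIFEST_SUFFIX):
            continue  # only the job's text file starts a job
        run_id = key.rsplit("/", 1)[-1][: -len(MANIFEST_SUFFIX)]
        # Batch job names allow only letters, digits, hyphens, underscores.
        safe_id = re.sub(r"[^A-Za-z0-9_-]", "-", run_id)
        requests.append({
            "jobName": f"nextflow-{safe_id}",
            "jobQueue": JOB_QUEUE,
            "jobDefinition": JOB_DEFINITION,
            "retryStrategy": {"attempts": 3},  # assumed retry count
            "containerOverrides": {
                "environment": [
                    {"name": "MANIFEST_URI", "value": f"s3://{bucket}/{key}"},
                ]
            },
        })
    return requests


def handler(event, context):
    """Lambda entry point. A deployed function would pass each request to
    boto3's Batch client, e.g. batch.submit_job(**request)."""
    return build_batch_requests(event)
```

Keeping the request-building logic separate from the AWS call makes it easy to test locally with a sample event before wiring it to a bucket notification.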

Once the job is complete, output files are stored in an S3 bucket where they can be viewed and downloaded by whoever needs them.

By utilizing spot instances and removing the need for continuously running servers, Cloud303 created a parallel computing deployment much less expensive than even one running on-demand EC2 instances, let alone dedicated on-prem servers.

Comprehensive Auditing and Logging

Mirvie has a twofold need for robust audit tracking and logging: their own internal needs and HIPAA’s compliance requirements. So from the beginning of the process until the end, Cloud303 ensured that Mirvie was auditing and logging everything happening in the cloud. To achieve this, Cloud303 implemented AWS CloudTrail and AWS Config to make sure that all API calls, as well as all configuration changes, were recorded.

Logs from the individual services, along with application logs, are sent to CloudWatch Logs and then to S3 for long-term storage. They remain in standard storage for 30 days in case they need to be queried with Athena, and are then moved to long-term storage in Glacier, where they remain for 6 years, in accordance with HIPAA regulations. The buckets also have S3 Server Access Logs enabled, so any attempts to view log data are recorded.
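The retention schedule described here maps naturally onto an S3 lifecycle configuration. The sketch below builds one in the shape accepted by boto3's `put_bucket_lifecycle_configuration`. The rule ID is hypothetical, and deleting objects once the six years are over is an assumption: the source says only how long the logs remain in Glacier.

```python
STANDARD_DAYS = 30        # queryable window in standard storage
RETENTION_DAYS = 6 * 365  # six years in Glacier, ignoring leap days


def log_lifecycle_configuration(prefix: str = "") -> dict:
    """Build an S3 lifecycle configuration for a log bucket."""
    return {
        "Rules": [
            {
                "ID": "archive-logs",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": STANDARD_DAYS, "StorageClass": "GLACIER"}
                ],
                # Assumption: objects are deleted once retention ends.
                "Expiration": {"Days": STANDARD_DAYS + RETENTION_DAYS},
            }
        ]
    }
```

With these numbers, objects move to Glacier on day 30 and expire on day 2220, that is, 30 days in standard storage plus 2190 days in Glacier.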

CloudWatch Alarms were also used to create budget alarms so Mirvie could be confident in their budget without manually checking their monthly bill.
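One common way to set up such alarms is on the `EstimatedCharges` metric in the `AWS/Billing` namespace. The sketch below builds arguments for CloudWatch's `put_metric_alarm` at several percentages of a monthly budget. The budget figure, the percentage steps, the alarm names, and the SNS topic are hypothetical; the source does not say how Mirvie's alarms were configured.

```python
def budget_alarms(monthly_budget_usd: float, topic_arn: str,
                  percents=(50, 80, 100)) -> list:
    """Build CloudWatch put_metric_alarm arguments for a monthly budget."""
    alarms = []
    for pct in percents:
        alarms.append({
            "AlarmName": f"estimated-charges-{pct}pct",  # hypothetical name
            "Namespace": "AWS/Billing",
            "MetricName": "EstimatedCharges",
            "Dimensions": [{"Name": "Currency", "Value": "USD"}],
            "Statistic": "Maximum",
            "Period": 21600,  # six hours; billing data updates slowly
            "EvaluationPeriods": 1,
            "Threshold": round(monthly_budget_usd * pct / 100, 2),
            "ComparisonOperator": "GreaterThanThreshold",
            # Notification target, e.g. an SNS topic (assumed).
            "AlarmActions": [topic_arn],
        })
    return alarms
```

Each dictionary could be passed to a boto3 CloudWatch client as `put_metric_alarm(**alarm)`; billing metrics are published in the us-east-1 region.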

We had the opportunity to architect a robust, HIPAA-compliant system that not only met but exceeded Mirvie's expectations. The migration to AWS allowed Mirvie to focus on their groundbreaking RNA research. This is the future of bioinformatics, and we're excited to be a part of it.

By moving their bioinformatics processing from on-prem to AWS, Mirvie reduced processing time by about 50%, from 16 hours to 8 hours, while Spot Instances enabled Mirvie to cut costs by up to 70% compared to on-demand instances. With the scalability AWS offers, Mirvie has been able to increase the number of clients it can accommodate without worrying about compute resources. Mirvie's workload has grown as well, going from around 50 clients to about 70 (roughly a 40% increase), and despite this, the platform remains affordable thanks to a design built on microservices (ECS) and serverless (Lambda) AWS technologies, along with Spot Instances, since the Nextflow/AWS Batch jobs can tolerate interruptions.